A discussion on containers innovation and next developments in automated and extensible IT infrastructure container solutions for hybrid IT and multicloud.

Listen to the podcast. Find it on iTunes. Download the transcript. Sponsor: Hewlett Packard Enterprise.

Dana Gardner: Hello, and welcome to the next edition of the BriefingsDirect Voice of the Innovator podcast series. I’m Dana Gardner, Principal Analyst at Interarbor Solutions, your host and moderator for this ongoing discussion on the latest insights into hybrid cloud and composability strategies.

Openness, flexibility, and speed to distributed deployments have been top drivers of the steady growth of container-based solutions. Now, IT operators are looking to increase automation, built-in intelligence, and robust management as they seek container-enabled hybrid cloud and multicloud approaches for data and workloads.

Stay with us now as we examine the rapidly evolving containers innovation landscape and learn about the next chapter of automated and extensible IT infrastructure solutions with Mark Linesch, Vice President of Technology Strategy in the CTO Office and Hewlett Packard Labs at Hewlett Packard Enterprise (HPE).

Welcome to BriefingsDirect, Mark.

Mark Linesch: Thanks, Dana. It’s great to be here.

Gardner: Let’s look at the state of the industry around containers. What are the top drivers for containers adoption now that the technology has matured?

Containers catch on

Linesch: The history of computing, as far back as I can remember, has been about abstraction: abstraction of the infrastructure and then a separation of concerns between the infrastructure and the applications.

It used to be it was all bare metal, and then about a decade ago, we went on the journey to virtualization. And virtualization is great; it’s an abstraction that allows for a certain amount of agility. But it’s fairly expensive because you are virtualizing the entire infrastructure, if you will, and dragging along a unique operating system (OS) each time you do that.

So the industry for the last few years has been saying, “Well, what’s next, what’s after virtualization?” And clearly things like containerization are starting to catch hold.

Why now? Well, because we are living in a hybrid cloud world, and we are moving pretty aggressively toward a more distributed edge-to-cloud world. We are going to be computing, analyzing, and driving intelligence in all of our edges -- and all of our clouds.

Things such as performance- and developer-aware capabilities, DevOps, the ability to run an application in a private cloud and then move it to a public cloud, and being able to drive applications to edge environments on a harsh factory floor -- these are all aspects of this new distributed computing environment that we are entering into. It’s a hybrid estate, if you will.

Containers have advantages for a lot of different constituents in this hybrid estate world. First and foremost are the developers. If you think about development and developers in general, they have moved from the older, monolithic and waterfall-oriented approaches to much more agile and continuous integration and continuous delivery models.

And containers give developers a predictable environment wherein they can couple not only the application but the application dependencies, the libraries, and all that they need to run an application throughout the DevOps lifecycle. That means from development through test, production, and delivery.

Containers carry and encapsulate all of the app’s requirements to develop, run, test, and scale. With bare metal or virtualization, as the app moved through the DevOps cycle, I had to worry about the OS dependencies and the type of platforms I was running that pipeline on.

Developers’ package deal

A key thing for developers is they can package the application and all the dependencies together into a distinct manifest. It can be version-controlled and easily replicated. And so the developer can debug and diagnose across different environments and save an enormous amount of time. So developers are the first beneficiaries, if you will, of this maturing containerized environment.

And increasingly in this more hybrid distributed edge-to-cloud world, I can run those containers virtually anywhere. I can run them at the edge, in a public cloud, in a private cloud, and I can move those applications quickly without all of these prior dependencies that virtualization or bare metal required. A container holds an entire runtime environment and application, plus all the dependencies, all the libraries, and the like.

The third area that’s interesting for containers is around isolation. Containers virtualize the CPU, memory, storage, and network resources – and they do that at the OS level. So they use resources much more efficiently for that reason.

Unlike virtualization, which includes your entire OS as well as the application, containers run on a single OS. Each container shares the OS kernel with other containers, so it’s lightweight, uses far fewer resources, and spins up almost instantly -- in seconds versus virtual machines (VMs) that spin up in minutes.

When you think about this fast-paced, DevOps world we live in -- this increasingly distributed hybrid estate from the many edges and many clouds we compute and analyze data in -- that’s why containers are showing quite a bit of popularity. It’s because of the business benefits, the technical benefits, the development benefits, and the operations benefits.

Gardner: It’s been fascinating for me to see the portability and fit-for-purpose containerization benefits, and being able to pass those along a DevOps continuum. But one of the things that we saw with virtualization was that too much of a good thing spun out of control. There was sprawl, lack of insight and management, and eventually waste.

How do we head that off with containers? How do containers become manageable across that entire hybrid estate?

Setting the standard

Linesch: One way is standardizing the container formats, and that’s been coming along fairly nicely. There is an initiative called the Open Container Initiative, part of the Linux Foundation, that develops industry standards so that container formats and the runtime software associated with them are standardized across the different platforms. That helps a lot.

Number two is using a standard deployment option. And the one that seems to be gripping the industry is Kubernetes. Kubernetes is an open source capability that provides mechanisms for deploying, maintaining, and scaling containerized applications. Now, the combination of the standard formats from a runtime perspective with the ability to manage that with capabilities like Mesosphere or Kubernetes has provided the tooling and the capabilities to move this forward.
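As an illustration of the "deploying, maintaining, and scaling" mechanisms mentioned here, the following is a minimal sketch, not anything described in the interview. It builds a Kubernetes `apps/v1` Deployment manifest as a plain Python dictionary and changes its replica count. The helper names `build_deployment` and `scale`, the application name, and the image reference `registry.example.com/defect-detector:1.0` are all hypothetical.

```python
import copy
import json


def build_deployment(name, image, replicas):
    """Return a Kubernetes apps/v1 Deployment manifest as a plain dict.

    The name, image, and replica count are illustrative inputs only.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # How many identical container instances should be kept running.
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def scale(manifest, replicas):
    """Return a copy of the manifest with a different replica count."""
    updated = copy.deepcopy(manifest)
    updated["spec"]["replicas"] = replicas
    return updated


if __name__ == "__main__":
    # Hypothetical application and image reference.
    manifest = build_deployment(
        "defect-detector", "registry.example.com/defect-detector:1.0", 3
    )
    print(json.dumps(scale(manifest, 5), indent=2))
```

Because Kubernetes accepts manifests in JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`. The sketch keeps the desired state as data, which is what makes it easy to version-control and replicate.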

Gardner: And the timing couldn’t be better, because as people are now focused on as-a-service for so much -- whether it’s an application, infrastructure, and increasingly, entire data centers -- we can focus on the business benefits and not the underlying technology. No one really cares whether it’s running in a virtualized environment, on bare metal, or in a container -- as long as you are getting the business benefits.

Linesch: You mentioned that nobody really cares what they are running on, and I would postulate that they shouldn’t care. In other words, developers should develop, operators should operate. The first business benefit is the enormous agility that developers get and that IT operators get in utilizing standard containerized environments.

This is very important when you think about IT composability in general because the combination of containerized environments with things like composable infrastructure provides the flexibility and agility to meet the needs of customers in a very time sensitive and very agile way.

Gardner: How are IT operators making a tag team of composability and containerization? Are they forming a whole greater than the sum of the parts? How do you see these two spurring innovation?

Linesch: I have managed some of our R&D centers. These are usually 50,000-square-foot data centers where all of our developers and hardware and software writers are off doing great work.

And we did some interesting things a few years ago. We were fully virtualized, a kind of private cloud environment, so we could deliver infrastructure-as-a-service (IaaS) resources to these developers. But as hybrid cloud hit and became more of a mature and known pattern, our developers were saying, “Look, I need to spin this stuff up more quickly. I need to be able to run through my development-test pipeline more effectively.”

And containers-as-a-service was just a super hit for these guys. They are under pressure every day to develop, build, and run these applications with the right security, portability, performance, and stability. The containerized systems -- and being able to quickly spin up a container, to do work, package that all, and then move it through their pipelines -- became very, very important.

From an infrastructure operations perspective, it provides a perfect marriage between the developers and the operators. The operators can use composition and things like our HPE Synergy platform and our HPE OneView tooling to quickly build container image templates. These then allow those developers to populate that containers-as-a-service infrastructure with the work that they do -- and do that very quickly.

Gardner: Another hot topic these days is understanding how a continuum will evolve between the edge deployments and a core cloud, or hybrid cloud environment. How do containers help in that regard? How is there a core-to-cloud and/or core-to-cloud-to-edge benefit when containers are used?

Gaining an edge

Linesch: I mentioned that we are moving to a much more distributed computing environment, where we are going to be injecting intelligence and processing through all of our places, people, and things. And so when you think about that type of an environment, you are saying, “Well, I’m going to develop an application. That application may require more microservices or more modular architecture. It may require that I have some machine learning (ML) or some deep learning analytics as part of that application. And it may then need to be provisioned to 40 -- or 400 -- different sites from a geographic perspective.”

When you think about edge-to-cloud, you might have a set of factories in different parts of the United States. For example, you may have 10 factories all seeking to develop inferencing and analyzed actions on some type of an industrial process. It might be video cameras attached to an assembly line looking for defects and ingesting data and analyzing that data right there, and then taking some type of a remediation action.

Then, how do I package up all of those application bits, analytics bits, and ML bits? How do I provision that to those 10 factories? How do I do that in a very fast and fluid way?

That’s where containers really shine. They will give you bare-metal performance. They are packaged and portable – and that really lends itself to the fast-paced delivery cycles required for these kinds of intelligent edge and Internet of Things (IoT) operations.
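The 10-factory scenario can be sketched in a few lines. This is a minimal illustration under stated assumptions rather than an HPE tool or a method described in the interview: the `SiteRollout` class, the `plan_rollout` function, the site names, the `CAMERA_COUNT` setting, and the image reference are all hypothetical. The point it shows is that one packaged image goes unchanged to every site, and only a small set of per-site settings varies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteRollout:
    """One site's share of a rollout: the shared image plus local settings."""
    site: str
    image: str
    settings: dict


def plan_rollout(image, sites, overrides=None):
    """Pair the same container image with every site.

    `overrides` optionally maps a site name to extra settings for that site.
    """
    overrides = overrides or {}
    plan = []
    for site in sites:
        settings = {"SITE_NAME": site}
        settings.update(overrides.get(site, {}))
        plan.append(SiteRollout(site=site, image=image, settings=settings))
    return plan


if __name__ == "__main__":
    # Ten hypothetical factory sites, named factory-01 through factory-10.
    factories = [f"factory-{n:02d}" for n in range(1, 11)]
    plan = plan_rollout(
        "registry.example.com/defect-detector:1.0",
        factories,
        overrides={"factory-03": {"CAMERA_COUNT": "12"}},
    )
    for item in plan:
        print(item.site, item.image, item.settings)
```

Scaling from 10 sites to 40 or 400 only changes the length of the `factories` list; the packaged image stays the same.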

Gardner: We

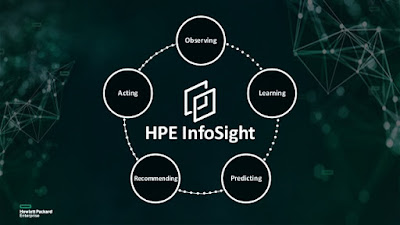

have heard a lot about AIOps and

injecting more intelligence into more aspects of IT infrastructure,

particularly at the June HPE

Discover conference. But we seem to be focusing on the gathering of the

data and the analysis of the data, and not so much on the what do you do with

that analysis – the execution based on the inferences.

It seems to me that containers provide a strong means when it comes to being able to exploit recommendations from an AI engine and then doing something -- whether to deploy, to migrate, to port.

Am I off on some rough tangent? Or is there something about containers -- and being able to deftly execute on what the intelligence provides -- that might also be of benefit?

Linesch: At the edge, you are talking about many applications where a large amount of data needs to be ingested. That data needs to be analyzed, and then a real-time action needs to be taken from a predictive maintenance, classification, or remediation perspective.

And so containers spin up very quickly. They use very few resources. The whole cycle time of ingesting data, analyzing that data through a container framework, and taking some action back on the thing that you are analyzing is made a whole lot easier and a whole lot more performant, with fewer resources, when you use containers.

Now, virtualization still has a very solid set of constituents, both at the hybrid cloud and at the intelligent edge. But we are seeing the benefits of containers really shine in these more distributed edge-to-cloud environments.

Gardner: Mark, we have chunked this out among the developer to operations and deployment, or DevOps implications. And we have talked about the edge and cloud.

But what about at the larger abstraction of impacting the IT organization? Is there a benefit for containerization where IT is resource-constrained when it comes to labor and skills? Is there a people, skills, and talent side of this that we haven’t yet tapped into?

Customer microservices support

Linesch: There definitely is. One of the things that we do at HPE is try to help customers move into these new models like containers, DevOps, and continuous integration and delivery. We offer a set of services that help customers, whether they are medium-sized customers or large customers, to think differently about development of applications. As a result, they are able to become more agile and microservices-oriented.

Microservice-oriented development really lends itself to this idea of containers, and the ability of containers to interact with each other as a full-set application. What you see happening is that you have to have a reason not to use containers now.

Our customers are moving to a more continuous integration and continuous delivery approach. And we can show them how to manage and operate these types of environments with high automation and low operational cost.

Gardner: A lot of the innovation we see along the lines of digital transformation at a business level requires taking services and microservices from different deployment models -- oftentimes multi-cloud, hybrid cloud, software-as-a-service (SaaS) services, on-premises, bare metal, databases, and so forth.

Are you seeing innovation percolating in that way? If you have any examples, I would love to hear them.

Linesch: I am seeing that. You see that every day when you look at the Internet. It’s a collaboration of different services based on APIs. You collect a set of services for a variety of different things from around these Internet endpoints, and that’s really as-a-service. That’s what it’s all about -- the ability to orchestrate all of your applications and collections of service endpoints.

Furthermore, beyond containers, there are new function-based, or serverless, types of computing. These innovators basically say, “Hey, I want to consume a service from someplace, from an HTTP endpoint, and I want to do that very quickly.” They very effectively are using service-oriented methodologies and the model of containers.

I recommend that companies not stand on the sidelines but get busy and get to a proof of concept with containers-as-a-service. We have a lot of expertise here at HPE. We have a lot of great partners, such as Docker, and so we are happy to help and engage.

We are seeing a lot of innovation in these function-as-a-service (FaaS) capabilities that some of the public clouds are now providing. And we are seeing a lot of innovation in the overall operations at scale of these hybrid cloud environments, given the portability of containers.

At HPE, we believe the cloud isn’t a place -- it’s an experience. The utilization of containers provides a great experience for both the development community and the IT operations community. It truly helps better support the business objectives of the company.

Investing in intelligent innovation

Gardner: Mark, for you personally, as you are looking for technology strategy, how do you approach innovation? Is this something that comes organically, that bubbles up? Or is there a solid process or workflow that gets you to innovation? How do you foster innovation in your own particular way that works?

Linesch: At HPE, we have three big levers that we pull on when we think about innovation.

The first is we can do a lot of organic development -- and that’s very important. It involves understanding where we think the industry is going, and trying to get ahead of that. We can then prove that out with proofs of concept and incubation kinds of opportunities with lead customers.

We also, of course, have a lever around inorganic innovation. For example, you saw recently an acquisition by HPE of Cray to turbocharge the next generation of high-performance computing (HPC) and to drive the next generation of exascale computing.

The third area is our partnerships and investments. We have deep collaboration with companies like Docker, for example. They have been a great partner for a number of years, and we have, quite frankly, helped to mature some of that container management technology.

We are an active member of the standards organizations around containers. Being able to mature the technology with partners like Docker, to get at the business value of some of these big advancements, is important. So those are just three ways we innovate.

Longer term, with other HPE core innovations, such as composability and memory-driven computing, we believe that containers are going to be even more important. You will be able to hold the containers in memory-driven computing systems, in either dynamic random-access memory (DRAM) or storage-class memory (SCM).

You will be able to spin them up instantly or spin them down instantly. The composition capabilities that we have will increasingly automate a very significant part of bringing up such systems, of bringing up applications, and really scaling and moving those applications to where they need to be.

One of the principles that we are focused on is moving the compute to the data -- as opposed to moving the data to the compute. And the reason for that is when you move the compute to the data, it’s a lot easier, simpler, and faster -- with fewer resources.

This next generation of distributed computing, memory-driven computing, and composability is really ripe for what we call containers in microseconds. And we will be able to do that all with the composability tooling we already have.

Gardner: When you get to that point, you’re not just talking about serverless. You’re talking about cloudless. It doesn’t matter where the FaaS is being generated as long as it’s at the right performance level that you require, when you require it. It’s very exciting.

Before we break, I wonder what guidance you have for organizations to become better prepared to exploit containers, particularly in the context of composability and leveraging a hybrid continuum of deployments? What should companies be doing now in order to be getting better prepared to take advantage of containers?

Be prepared, get busy

Linesch: If you are developing applications, then think deeply about agile development principles. Developing applications with a microservices bent is very, very important.

If you are in IT operations, it’s all about being able to offer bare metal, virtualization, and containers-as-a-service options -- depending on the workload and the requirements of the business.

We have quite a bit of on-boarding and helpful services along the journey. And so jump in and crawl, walk, and run through it. There are always some sharp corners on advanced technology, but containers are maturing very quickly. We are here to help our customers on that journey.

Gardner: I’m afraid we’ll have to leave it there. We have been exploring how openness, flexibility, and speed to distributed deployments have been top drivers for the steady growth of container-based solutions. And we have learned how IT operators now are looking to increase automation, built-in intelligence, and robust management as they seek to take advantage of container-enabled hybrid cloud models.

So please join me in thanking Mark Linesch, Vice President of Technology Strategy in the CTO Office and Hewlett Packard Labs at HPE. Thank you so much, Mark.

Linesch: Thank you, Dana. I really enjoyed the conversation.

Gardner: And a big thank you as well to our audience for joining this BriefingsDirect Voice of the Innovator interview. I’m Dana Gardner, Principal Analyst at Interarbor Solutions, your host for this ongoing series of Hewlett Packard Enterprise-sponsored discussions.

Thanks again for listening.

Please pass this along to your IT community, and do come back next time.

Copyright Interarbor Solutions, LLC, 2005-2019. All rights reserved.
